"A computer, to print out a fact, / Will divide, multiply, and subtract. / But this output can be / No more than debris, / If the input was short of exact." - Gigo

In recent years, interest in artificial intelligence (AI) has surged, particularly in machine learning techniques, which show considerable potential to change industries such as healthcare, finance, and transportation. These algorithms analyze large amounts of data in order to "learn" patterns and make predictions or decisions with some degree of accuracy, in certain narrow tasks matching or surpassing human performance.

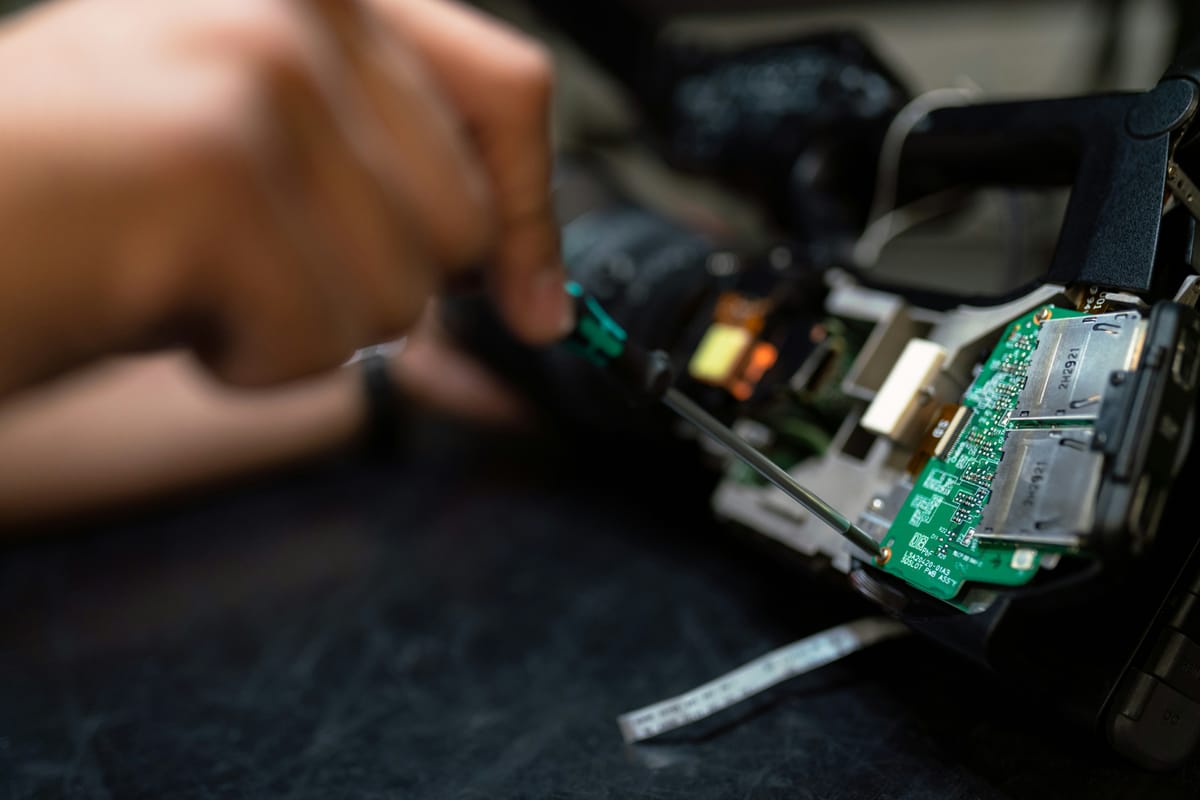

The technology behind these AI applications ultimately rests on basic arithmetic, the division, multiplication, and subtraction of the opening verse, applied to data at scale. The arithmetic itself is rarely the weak point: it is widely acknowledged that the accuracy of the results depends heavily on the quality of the input data. The more precise and complete the input, the more reliable the output can be.
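To make the dependence on input quality concrete, here is a minimal Python sketch. The readings are invented for illustration (hypothetical body temperatures in degrees Celsius, with one value mistakenly entered in Fahrenheit); they are not taken from any real dataset. The computation is identical in both cases, and only the input differs.

```python
from statistics import mean

# Hypothetical body-temperature readings in degrees Celsius (illustrative only).
clean_readings = [36.6, 36.8, 37.0, 36.7]

# The same readings, except one value was entered in Fahrenheit by mistake.
corrupted_readings = [36.6, 36.8, 98.6, 36.7]


def average(readings):
    """Return the arithmetic mean of the readings."""
    return mean(readings)


clean_avg = average(clean_readings)
corrupted_avg = average(corrupted_readings)

# The arithmetic is carried out correctly both times; only the second
# result is useless, because one input value was wrong.
print(f"average of clean input:     {clean_avg:.1f}")
print(f"average of corrupted input: {corrupted_avg:.1f}")
```

The second average is far outside any plausible body temperature, even though no step of the calculation failed.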

This is where a significant problem arises: inaccurate or incomplete datasets can influence AI-driven decisions. In today's interconnected digital ecosystem, it is increasingly difficult to ensure that all of the data processed by these algorithms is accurate. The consequences of bad input can range from minor inconveniences to catastrophic failures in critical systems, such as autonomous vehicles or healthcare diagnostics.
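One common first line of defense is to check each record for missing or implausible values before it reaches a model. The sketch below is only an illustration under stated assumptions: the field names, types, and allowed ranges in `SCHEMA` are hypothetical, and a real system would need domain-specific rules.

```python
# Hypothetical schema: field name -> (expected type, allowed minimum, allowed maximum).
SCHEMA = {
    "heart_rate_bpm": (int, 20, 250),
    "temperature_c": (float, 30.0, 45.0),
}


def validate_record(record: dict) -> list:
    """Return a list of problems found; an empty list means the record passed."""
    problems = []
    for field, (expected_type, low, high) in SCHEMA.items():
        # Incomplete data: the field is absent or explicitly empty.
        if field not in record or record[field] is None:
            problems.append(f"{field}: missing")
            continue
        value = record[field]
        # Wrong type, for example a number stored as text.
        if not isinstance(value, expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}")
            continue
        # Implausible value, for example a reading in the wrong unit.
        if not low <= value <= high:
            problems.append(f"{field}: {value} outside [{low}, {high}]")
    return problems


complete = {"heart_rate_bpm": 72, "temperature_c": 36.8}
incomplete = {"heart_rate_bpm": 72}
implausible = {"heart_rate_bpm": 72, "temperature_c": 98.6}

for record in (complete, incomplete, implausible):
    print(record, "->", validate_record(record) or "ok")
```

Checks like these catch only the errors that violate an explicit rule. A value that is wrong but plausible passes unnoticed, which is part of why the problem is hard.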

The challenge of ensuring the integrity of input data has led some experts to explore new approaches to data validation and verification. One such approach relies on trusted execution environments (TEEs), which are isolated computing spaces that provide a secure environment for sensitive operations, such as cryptographic key management or the execution of trusted software. A TEE protects data and code while they are in use; on its own it does not show that the data was accurate when it was collected.

Another proposed solution is an open-source, decentralized, global network of data validators who would collaborate to check datasets before they enter the AI pipeline. This system would resemble the peer-review process in scientific research, but applied to the raw data inputs of AI algorithms.
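The source does not describe how such a network would operate, so the following Python sketch is purely an assumption about one possible shape: each validator reviews a dataset and records a vote against its SHA-256 fingerprint, and the dataset is admitted only if enough validators approved that exact fingerprint. The names `fingerprint`, `accept`, and `min_approvals`, the validator names, and the sample data are all hypothetical.

```python
import hashlib
import json


def fingerprint(dataset: list) -> str:
    """Hash a canonical JSON encoding so every validator votes on the same bytes."""
    canonical = json.dumps(dataset, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def accept(dataset: list, votes: dict, min_approvals: int) -> bool:
    """Admit the dataset only if enough validators approved this exact content.

    `votes` maps a validator name to a (fingerprint, approved) pair.
    """
    digest = fingerprint(dataset)
    approvals = sum(
        1 for seen, approved in votes.values() if approved and seen == digest
    )
    return approvals >= min_approvals


# Illustrative data and votes (hypothetical).
dataset = [{"id": 1, "temperature_c": 36.8}, {"id": 2, "temperature_c": 37.1}]
digest = fingerprint(dataset)
votes = {
    "validator_a": (digest, True),
    "validator_b": (digest, True),
    "validator_c": (digest, False),
}

# A record appended after review changes the fingerprint, so earlier votes no longer count.
tampered = dataset + [{"id": 3, "temperature_c": 98.6}]

print(accept(dataset, votes, min_approvals=2))
print(accept(tampered, votes, min_approvals=2))
```

Binding each vote to a content hash means an approval cannot be silently reused for a modified dataset. The sketch leaves out everything that makes a real decentralized network difficult, such as validator identity, incentives, and collusion.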

These proposals underline that the future success of artificial intelligence depends heavily on our ability to reduce risks to data integrity. As the technology advances, efforts to guard against these vulnerabilities must keep pace, so that these powerful tools are used responsibly and effectively.